Slide-Seq Mouse Olfactory Bulb - multiple pucks¶

This example uses TACCO to annotate and analyse mouse olfactory bulb Slide-Seq data (Wang et al.) with mouse olfactory bulb scRNA-seq data (Tepe et al.) as reference.

(Wang et al.): Wang IH, Murray E, Andrews G, Jiang HC et al. Spatial transcriptomic reconstruction of the mouse olfactory glomerular map suggests principles of odor processing. Nat Neurosci 2022 Apr;25(4):484-492. PMID: 35314823

(Tepe et al.): Tepe B, Hill MC, Pekarek BT, Hunt PJ et al. Single-Cell RNA-Seq of Mouse Olfactory Bulb Reveals Cellular Heterogeneity and Activity-Dependent Molecular Census of Adult-Born Neurons. Cell Rep 2018 Dec 4;25(10):2689-2703.e3. PMID: 30517858

[1]:

import os

import sys

import pandas as pd

import numpy as np

import anndata as ad

import tacco as tc

# The notebook expects to be executed either in the workflow directory or in the repository root folder...

sys.path.insert(1, os.path.abspath('workflow' if os.path.exists('workflow/common_code.py') else '..'))

import common_code

Load data¶

[2]:

data_path = common_code.find_path('results/slideseq_mouse_olfactory_bulb')

reference = ad.read(f'{data_path}/reference.h5ad')

pucks = ad.concat({f.split('.')[0]: ad.read(f'{data_path}/{f}') for f in os.listdir(data_path) if f.startswith('puck_') and f.endswith('.h5ad')},label='puck',index_unique='-')

[3]:

pucks.obs['replicate'] = pucks.obs['puck'].str.split('_').str[1].astype(int)

pucks.obs['slide'] = pucks.obs['puck'].str.split('_').str[2].astype(int)

unique_replicates = sorted(pucks.obs['replicate'].unique())

unique_slides = sorted(pucks.obs['slide'].unique())

pucks.obs['replicate'] = pucks.obs['replicate'].astype(pd.CategoricalDtype(unique_replicates,ordered=True))

pucks.obs['slide'] = pucks.obs['slide'].astype(pd.CategoricalDtype(unique_slides,ordered=True))

pucks.obs['puck'] = pucks.obs['puck'].astype(pd.CategoricalDtype([f'puck_{r}_{s}' for r in reversed(unique_replicates) for s in unique_slides],ordered=True))

Get a first impression of the spatial data¶

Plot total counts

[4]:

pucks.obs['total_counts'] = tc.sum(pucks.X,axis=1)

pucks.obs['log10_counts'] = np.log10(1+pucks.obs['total_counts'])

[5]:

fig,axs = tc.pl.subplots(len(unique_slides),len(unique_replicates), x_padding=1.5)

fig = tc.pl.scatter(pucks, 'log10_counts', group_key='puck', cmap='viridis', cmap_vmin_vmax=[1,3], ax=axs[::-1].reshape((1,40)));

Plot marker genes

[6]:

cluster2type = reference.obs[['ClusterName','type']].drop_duplicates().groupby('type')['ClusterName'].agg(lambda x: list(x.to_numpy()))

type2long = reference.obs[['type','long']].drop_duplicates().groupby('long')['type'].agg(lambda x: list(x.to_numpy()))

[7]:

marker_map = {}

for k,v in type2long.items():

genes = []

if '(' in k:

genes = k.split('(')[-1].split(')')[0].split('/')

marker_map[v[0]] = [g[:-1] for g in genes]

[8]:

pucks.obsm['type_mrk'] = pd.DataFrame(0.0, index=pucks.obs.index, columns=sorted(reference.obs['type'].unique()))

for k,v in marker_map.items():

for g in v:

pucks.obsm['type_mrk'][k] += pucks[:,g].X.A.flatten()

total = pucks.obsm['type_mrk'][k].sum()

if total > 0:

pucks.obsm['type_mrk'][k] /= total

[9]:

fig,axs=tc.pl.subplots(len(unique_slides),len(unique_replicates))

tc.pl.scatter(pucks, 'type_mrk', group_key='puck', ax=axs[::-1].reshape((1,40)), compositional=True);

Annotate the spatial data with compositions of cell types¶

Annotation is done on cluster level to capture variation within a cell type…

[10]:

tc.tl.annotate(pucks, reference, 'ClusterName', result_key='ClusterName',)

Starting preprocessing

Annotation profiles were not found in `reference.varm["ClusterName"]`. Constructing reference profiles with `tacco.preprocessing.construct_reference_profiles` and default arguments...

Finished preprocessing in 160.89 seconds.

Starting annotation of data with shape (1421244, 15437) and a reference of shape (51426, 15437) using the following wrapped method:

+- platform normalization: platform_iterations=0, gene_keys=ClusterName, normalize_to=adata

+- multi center: multi_center=None multi_center_amplitudes=True

+- bisection boost: bisections=4, bisection_divisor=3

+- core: method=OT annotation_prior=None

mean,std( rescaling(gene) ) 23.102077197895326 276.93135100039547

bisection run on 1

bisection run on 0.6666666666666667

bisection run on 0.4444444444444444

bisection run on 0.2962962962962963

bisection run on 0.19753086419753085

bisection run on 0.09876543209876543

Finished annotation in 772.18 seconds.

[10]:

AnnData object with n_obs × n_vars = 1421244 × 17001

obs: 'x', 'y', 'puck', 'replicate', 'slide', 'total_counts', 'log10_counts'

obsm: 'type_mrk', 'ClusterName'

varm: 'ClusterName'

… and then aggregated to cell type level for visualization

[11]:

tc.utils.merge_annotation(pucks, 'ClusterName', cluster2type, 'type');

tc.utils.merge_annotation(pucks, 'type', type2long, 'long');

[12]:

fig,axs=tc.pl.subplots(len(unique_slides),len(unique_replicates))

tc.pl.scatter(pucks, 'type', group_key='puck', ax=axs[::-1].reshape((1,40)));

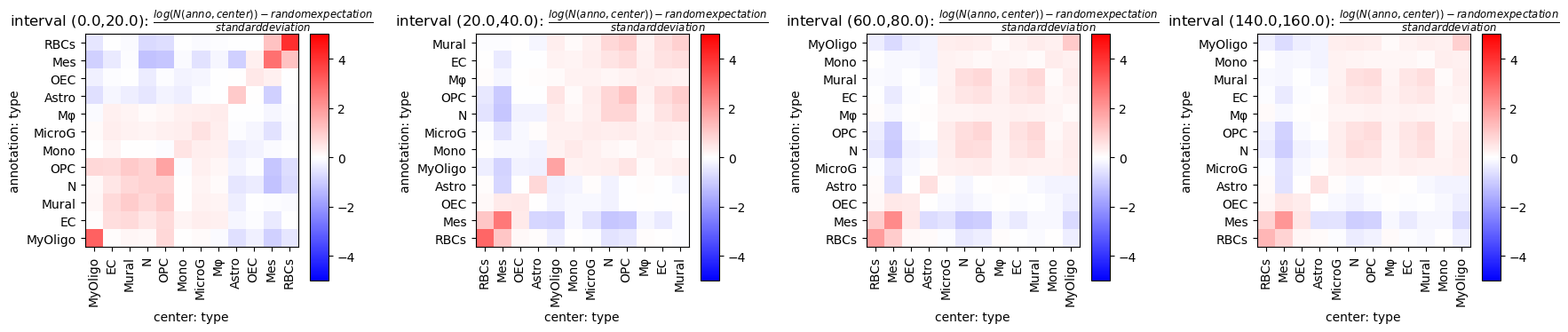

Analyse co-occurrence and neighbourhips¶

Calculate distance matrices per sample and evaluate different spatial metrics on that. Using sparse distance matrices is useful if one is interested only in small distances relative to the sample size.

[13]:

tc.tl.co_occurrence(pucks, 'type', sample_key='puck', result_key='type-type',delta_distance=20,max_distance=1000,sparse=False,n_permutation=10)

co_occurrence: The argument `distance_key` is `None`, meaning that the distance which is now calculated on the fly will not be saved. Providing a precalculated distance saves time in multiple calls to this function.

calculating distance for sample 1/40

calculating distance for sample 2/40

calculating distance for sample 3/40

calculating distance for sample 4/40

calculating distance for sample 5/40

calculating distance for sample 6/40

calculating distance for sample 7/40

calculating distance for sample 8/40

calculating distance for sample 9/40

calculating distance for sample 10/40

calculating distance for sample 11/40

calculating distance for sample 12/40

calculating distance for sample 13/40

calculating distance for sample 14/40

calculating distance for sample 15/40

calculating distance for sample 16/40

calculating distance for sample 17/40

calculating distance for sample 18/40

calculating distance for sample 19/40

calculating distance for sample 20/40

calculating distance for sample 21/40

calculating distance for sample 22/40

calculating distance for sample 23/40

calculating distance for sample 24/40

calculating distance for sample 25/40

calculating distance for sample 26/40

calculating distance for sample 27/40

calculating distance for sample 28/40

calculating distance for sample 29/40

calculating distance for sample 30/40

calculating distance for sample 31/40

calculating distance for sample 32/40

calculating distance for sample 33/40

calculating distance for sample 34/40

calculating distance for sample 35/40

calculating distance for sample 36/40

calculating distance for sample 37/40

calculating distance for sample 38/40

calculating distance for sample 39/40

calculating distance for sample 40/40

[13]:

AnnData object with n_obs × n_vars = 1421244 × 17001

obs: 'x', 'y', 'puck', 'replicate', 'slide', 'total_counts', 'log10_counts'

uns: 'type-type'

obsm: 'type_mrk', 'ClusterName', 'type', 'long'

varm: 'ClusterName'

[14]:

fig = tc.pl.co_occurrence(pucks, 'type-type', log_base=2, wspace=0.25);

[15]:

fig = tc.pl.co_occurrence_matrix(pucks, 'type-type', score_key='z', restrict_intervals=[0,1,3,7],cmap_vmin_vmax=[-5,5], value_cluster=True, group_cluster=True);

[ ]: